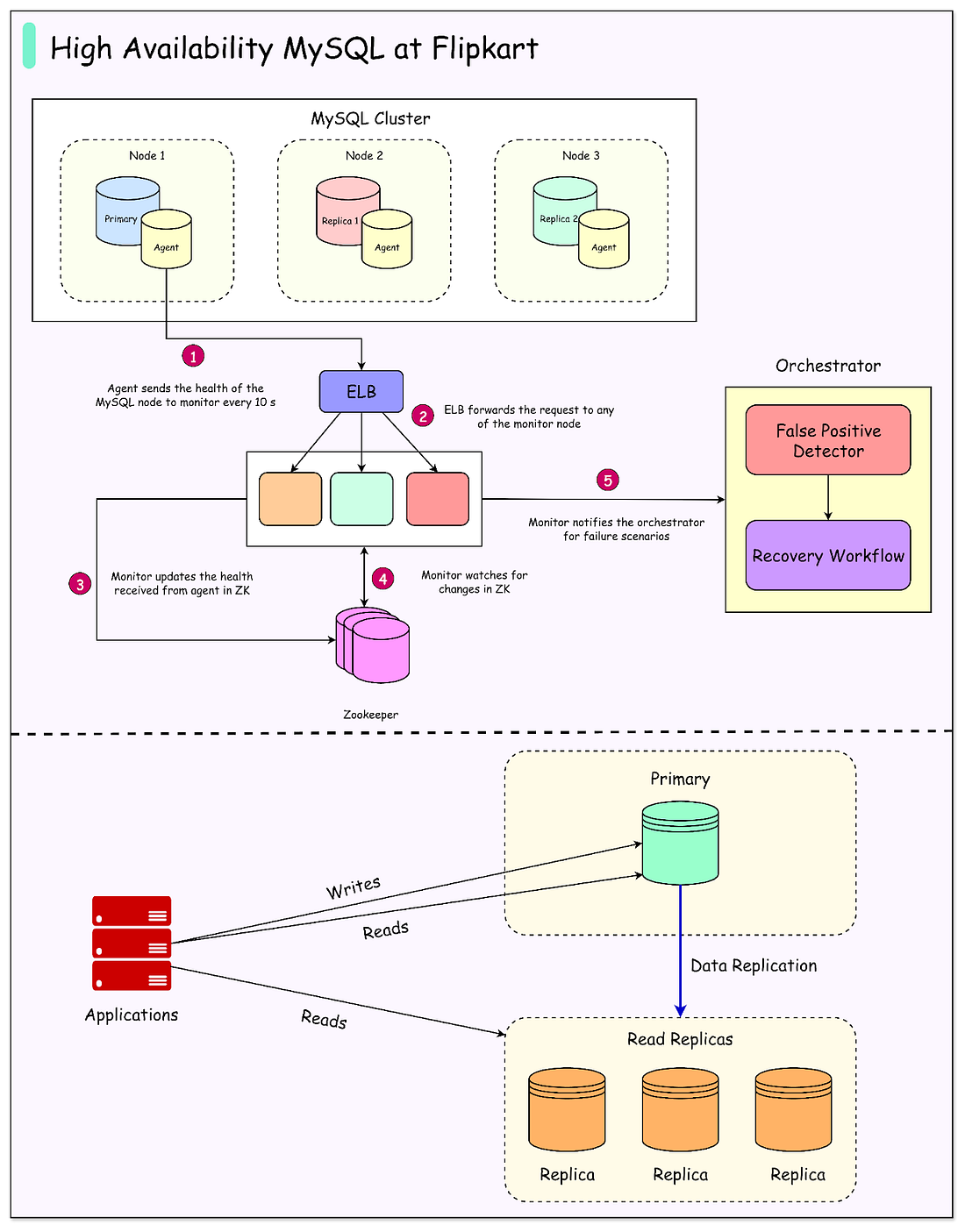

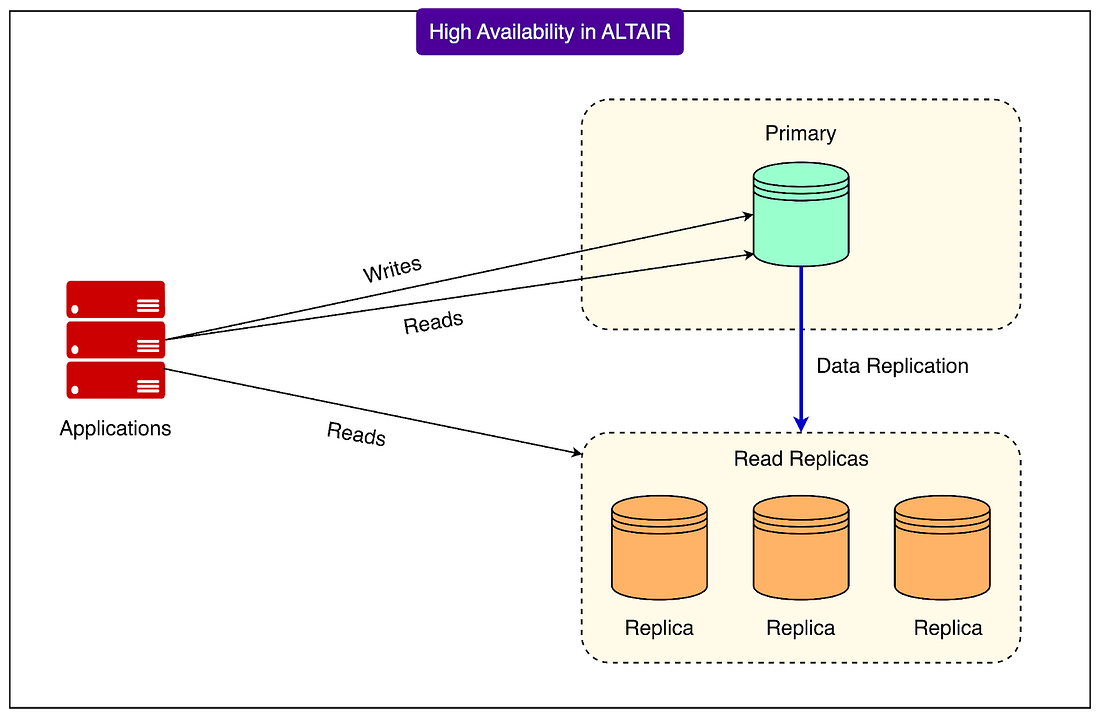

Rust rewrites, trends, and what’s next for Rust at P99 CONF (free + virtual) (Sponsored)P99 CONF is the technical conference for anyone who obsesses over high-performance, low-latency applications. Naturally, Rust is a core topic. How is Rust being applied to solve today’s low latency challenges – and where it could be heading next? That’s what experts from Clickhouse, Prime Video, Neon, Datadog, and more will be exploring Join 20K of your peers for an unprecedented opportunity to learn from engineers at Pinterest, Gemini, Arm, Rivian and VW Group Technology, Meta, Wayfair, Disney, Uber, NVIDIA, and more – for free, from anywhere. Bonus: Registrants can win 500 free swag packs and get 30-day access to the complete O’Reilly library. Disclaimer: The details in this post have been derived from the official documentation shared online by the Flipkart Engineering Team. All credit for the technical details goes to the Flipkart Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them. Flipkart is one of India’s largest e-commerce platforms with over 500 million users and 150-200 million daily users. It handles extreme surges in traffic during events like its Big Billion Days sale. To keep the business running smoothly, the company relies on thousands of microservices that cover every part of its operations, from order management to logistics and supply chain systems. Among these systems, the most critical transactional domains depend on MySQL because it provides the durability and ACID guarantees that e-commerce workloads demand. However, managing MySQL at Flipkart’s scale presented serious challenges. Each engineering team often operated its own database clusters, resulting in uneven practices, duplicated effort, and a high operational burden. This complexity was most visible during peak shopping periods, when even small inefficiencies could cascade into major disruptions. To solve this, the Flipkart engineering team built Altair, an internally managed service designed to offer MySQL with high availability (HA) as a standard feature. Altair’s purpose is to ensure that the company’s most important databases remain consistently available for writes, while also reducing the manual work required by teams to keep them healthy. In practice, this means that Flipkart engineers can focus more on building services while relying on Altair to handle the heavy lifting of database failover, recovery, and availability management. In this article, we will look at how Altair works under the hood, the technical decisions Flipkart made to balance availability and consistency, and the engineering trade-offs that come with running relational databases at a massive scale. Help us Make ByteByteGo Newsletter BetterTL:DR: Take this 2-minute survey so I can learn more about who you are,. what you do, and how I can improve ByteByteGo High Availability Model at FlipkartFlipkart’s Altair system uses a primary–replica setup to keep MySQL highly available. In this model, there is always one primary database that accepts all the writes. This primary may also handle some reads. Alongside it are one or more replicas. These replicas continuously copy data from the primary in an asynchronous manner, which means there can be a small delay before changes appear on them. Replicas usually handle most of the read traffic, while the primary focuses on writes. The main goal of this setup is simple: if the primary fails, the system should quickly promote a healthy replica to take its place as the new primary. This ensures that write operations remain available with minimal disruption. Flipkart’s availability target for Altair is very high, close to what is known as “five nines.” That means the system is expected to stay up and running more than 99.999 percent of the time. Of course, no complex system can ever promise perfect uptime, but the goal is to keep downtime as close to zero as possible. To make failover reliable, the Flipkart engineering team considered several important factors:

By combining these elements, Altair is designed to keep MySQL highly available even under failure conditions. End-to-End Failure WorkflowWhen a primary database fails, Altair follows a well-defined sequence of steps to recover and make sure applications can continue writing data. This process is called a failover workflow, and it involves multiple components working together. The workflow has five main stages:

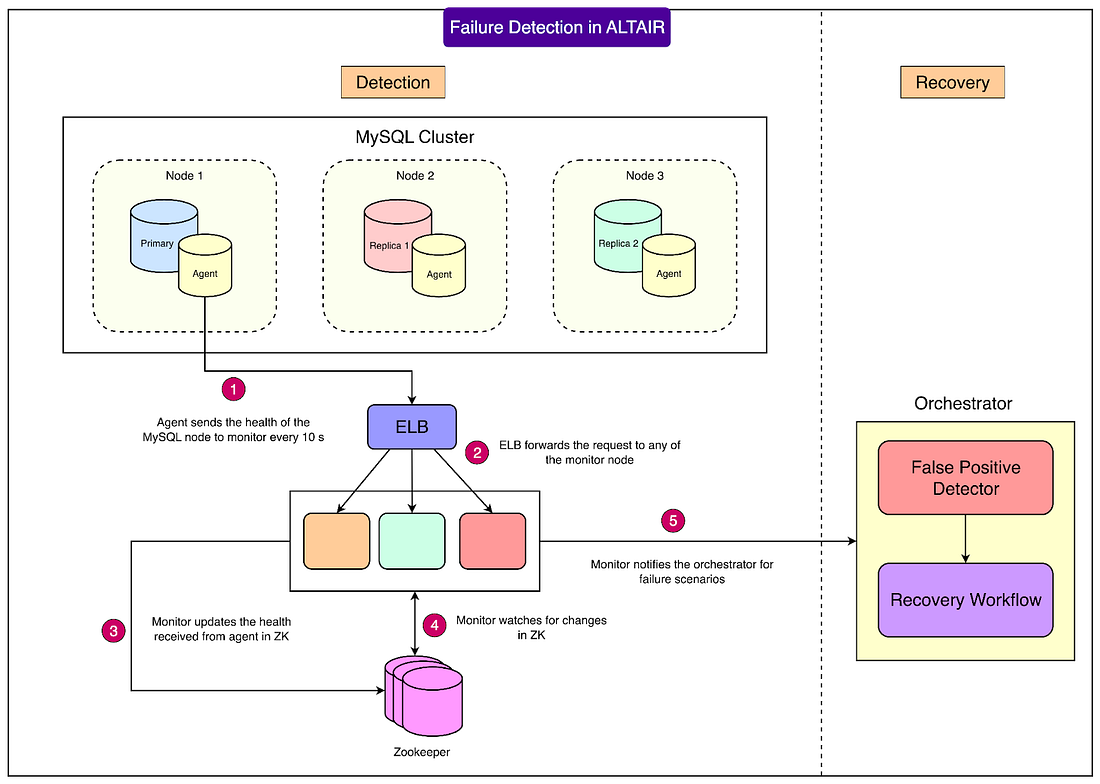

How Failure Detection WorksAltair uses a three-layered monitoring system to detect failures:

See the diagram below: Preventing false alarmsInstead of simply relying on a few missed signals, Altair performs deeper checks:

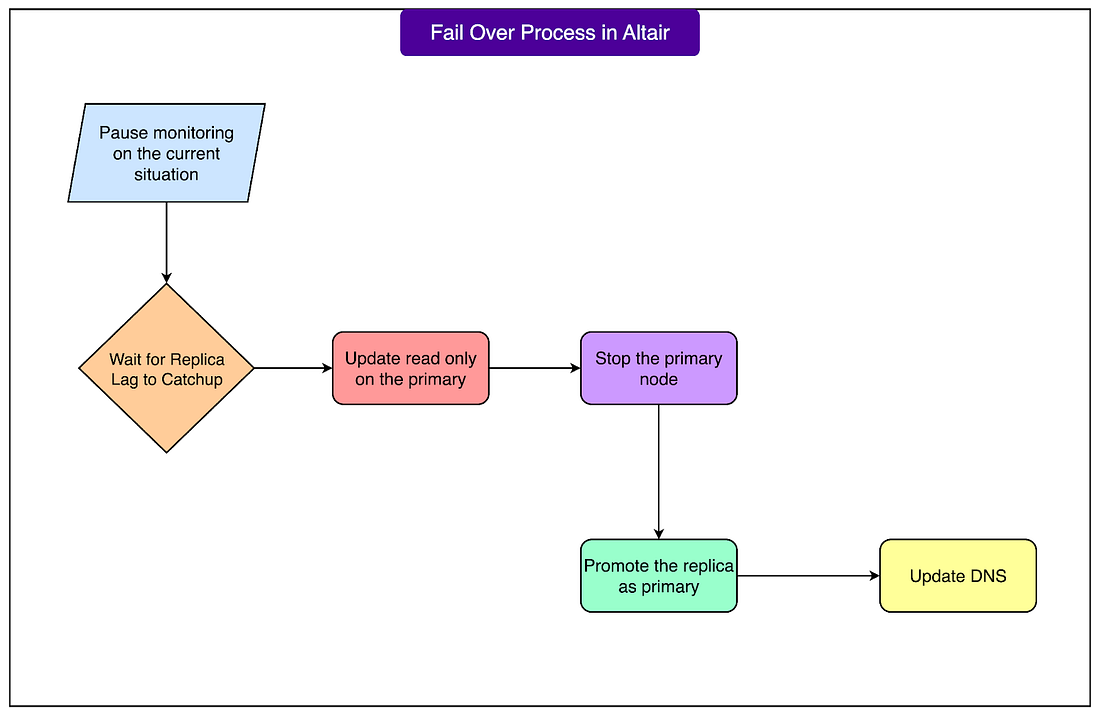

The rule is straightforward: as long as either the orchestrator or at least one replica can connect to the primary, the primary is considered alive. If both fail, the system proceeds with failover. Steps during failoverWhen the orchestrator decides to failover, Altair runs these tasks in order:

See the diagram below: This structured approach ensures that failovers are smooth, data loss is minimized, and applications reconnect to the new primary without manual intervention in most cases. Service DiscoveryOnce a failover is complete, applications need to know where the new primary database is located. Altair solves this using DNS-based service discovery. Here’s how it works:

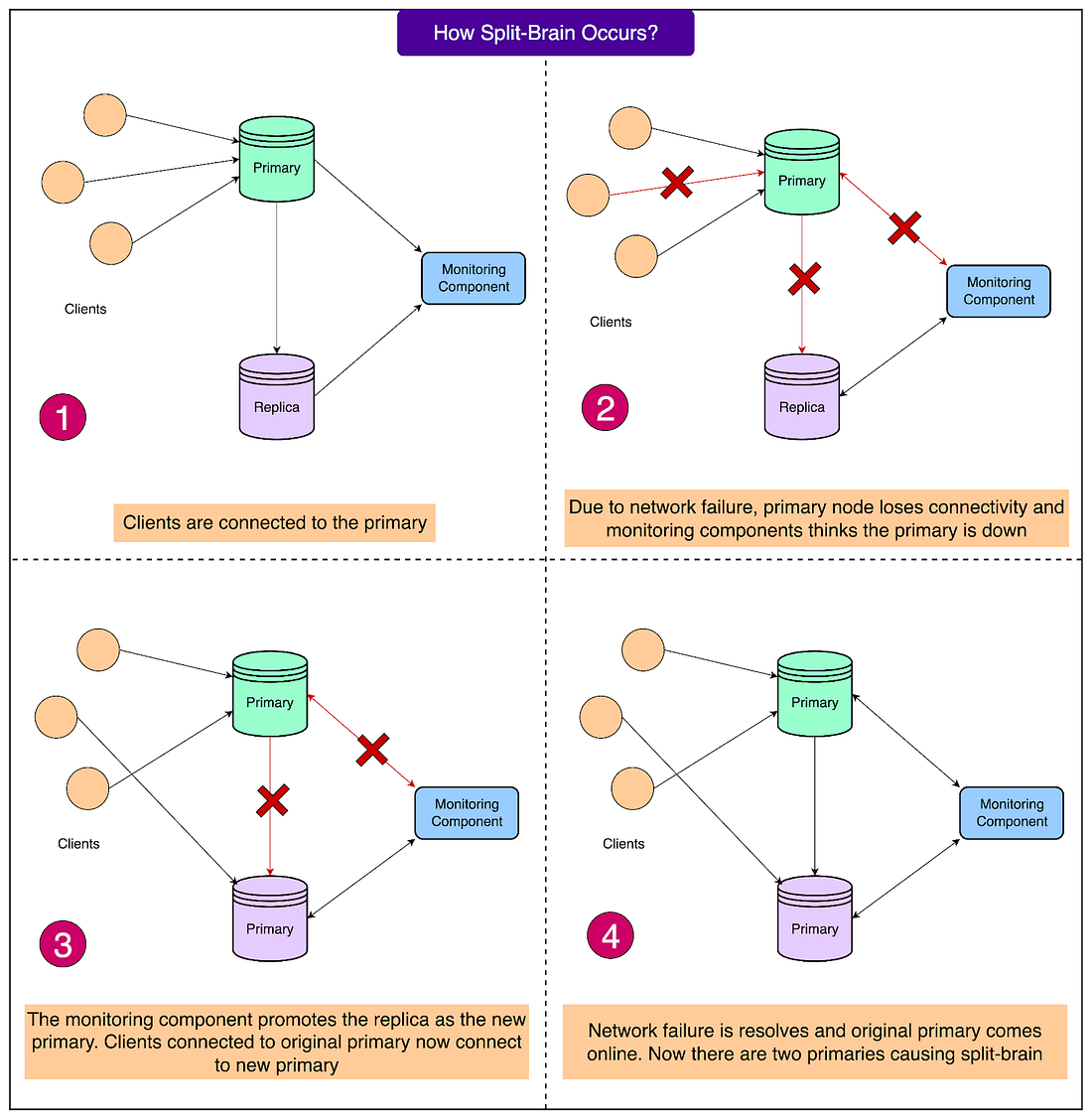

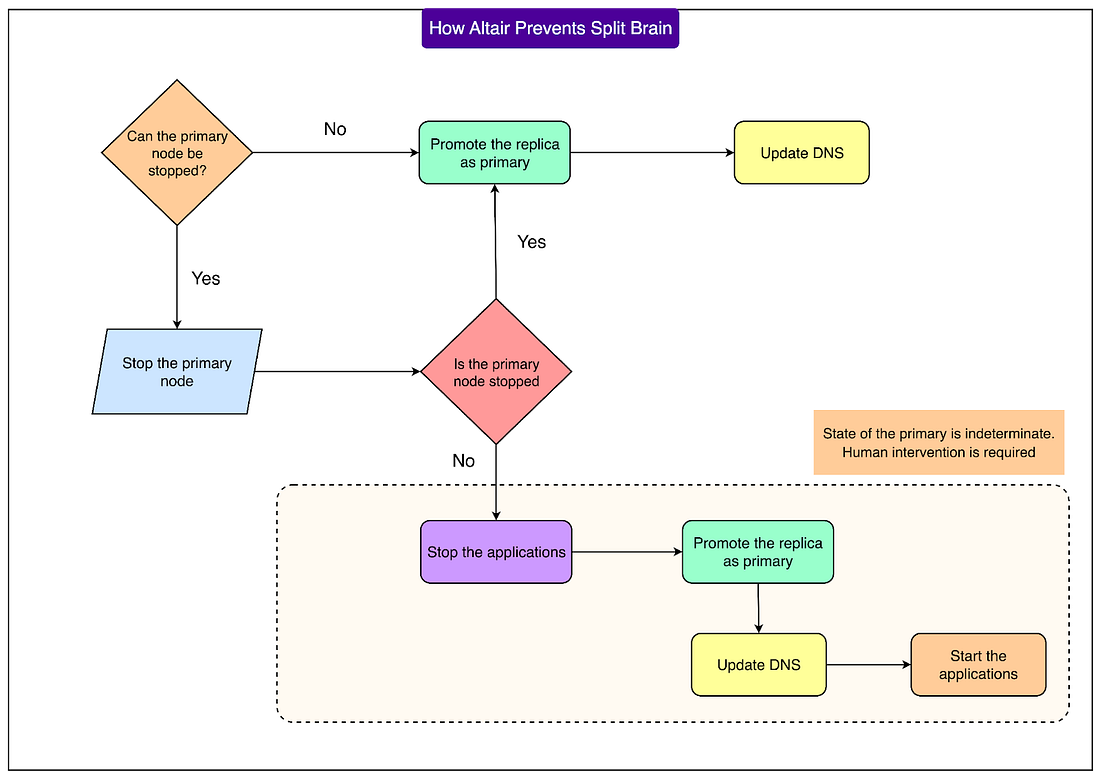

This design means that most applications do not need any manual updates or restarts. As soon as they make a fresh connection, they automatically reach the new primary. The only exceptions are unusual situations where DNS changes are not picked up or where the network is partitioned in a way that requires manual intervention. In those rare cases, Flipkart’s engineering team coordinates with client teams to restart applications and ensure traffic points to the right place. Split-Brain RisksOne of the biggest risks in any high-availability setup is something called split-brain. This happens when two different nodes both think they are the primary at the same time. If both accept writes, the data can diverge and become inconsistent across the cluster. Fixing this later requires painful reconciliation. Split-brain usually occurs during network partitions. Imagine the primary is healthy, but because of a network issue, the rest of the system cannot reach it. From their perspective, it looks dead. A replica is then promoted to primary, while the original primary continues accepting writes. Now there are two primaries. See the diagram below: With MySQL’s asynchronous replication, this problem is even harder to solve because the system cannot guarantee both strong consistency and availability during a network split. Flipkart chooses to prioritize availability, but adds safeguards to prevent split-brain. If split-brain happens, the effects can be serious. Orders might be split across two different databases, leading to confusion for both customers and sellers. Reconciling this data later is time-consuming and costly. Flipkart cites GitHub’s 2018 incident as an example, where a short connectivity problem led to nearly 24 hours of reconciliation work. Altair includes multiple safeguards to defend against a split-brain scenario:

See the diagram below: This process ensures that the risk of having two primaries is avoided, even if it requires a brief pause in availability. Failure Scenarios and How Altair Handles ThemDatabases can fail in many different ways, and each type of failure needs to be handled carefully. Altair is designed to detect different failure scenarios and react appropriately so that downtime is minimized and data remains safe. Let’s go through the major cases and see how Altair deals with each one. 1 - Node (Host) FailureSometimes the entire machine (virtual machine or physical host) running the primary database can go down. In such a case, the local agent running on that machine stops sending health updates. When the monitor does not receive three consecutive 10-second updates (about 30 seconds of silence), it marks the node as unhealthy and alerts the orchestrator. The orchestrator verifies that the node is really unreachable and then triggers a failover, promoting a replica to become the new primary. 2 - MySQL Process FailureEven if the host machine is fine, the MySQL process itself may crash. Here’s what happens in this case:

3 - Network Partition Between Primary and ReplicasSometimes the primary and replicas cannot talk to each other because of a network issue, even though both are still alive. In other words, replicas lose connectivity to the primary. This alone is not enough reason to trigger a failover. The system avoids acting on replica-only signals because the primary may still be healthy and reachable by clients. 4 - Network Partitions Between the Control Plane and PrimaryThis is more complex because Altair’s control plane (monitor and orchestrator) might lose communication with the primary while the primary itself is still running. Altair has to carefully analyze the situation to avoid false failovers. There are three sub-cases:

Design Highlights and Trade-OffsBuilding a system like Altair means balancing several competing goals. The Flipkart engineering team had to make careful choices about what to prioritize and how to design the system so that it worked reliably at scale. Here are the key highlights and trade-offs. Balancing Consistency and AvailabilityMySQL in Altair uses asynchronous replication. This means that replicas copy data from the primary with a slight delay. Because of this, there is always a trade-off:

Flipkart chose to prioritize availability. In practice, this means that during failover, some of the last few transactions on the old primary might not make it to the new primary. Altair reduces this risk by letting replicas catch up on relay logs whenever possible and by making planned failovers read-only before switching roles. But in unplanned crashes, a small amount of data loss is possible. Smarter Health ChecksOne of Altair’s biggest strengths is how it avoids false alarms. Instead of just checking whether a few signals are missed, it uses multiple sources of truth:

This layered approach prevents unnecessary failovers that could disrupt the system when the primary is actually fine. Simplified Service DiscoveryAltair uses DNS indirection to make failovers smooth. By updating the DNS record of the primary after promotion, applications automatically connect to the new primary without needing to change code or restart in most cases. This keeps the system simpler for developers who build on top of it. Scalable Monitoring DesignAltair’s monitoring system is designed to scale as Flipkart grows:

This separation of responsibilities ensures both reliability and scalability. ConclusionAltair represents Flipkart’s answer to the difficult problem of keeping relational databases highly available at a massive scale. By standardizing on a primary–replica setup with asynchronous replication, the engineering team ensured that MySQL could continue to serve as the backbone for critical transactional systems. The system emphasizes write availability, while carefully minimizing data loss through relay log catch-up and planned read-only failovers. Altair’s layered monitoring design (combining agents, monitors, ZooKeeper, and an orchestrator) allows reliable detection of failures without triggering false positives. Service discovery through DNS updates keeps application integration simple, while fencing mechanisms and procedural safeguards protect against the dangerous risk of split-brain. The system also scales horizontally, supervising thousands of clusters across Flipkart’s microservices. The key trade-off is accepting the possibility of minor data loss in exchange for fast, automated recovery. By doing so, Altair balances consistency, availability, and operational simplicity in a way that matches Flipkart’s business needs. In practice, this design has reduced operational overhead and delivered dependable high availability during peak events, making MySQL a reliable foundation for Flipkart’s e-commerce platform. References: SPONSOR USGet your product in front of more than 1,000,000 tech professionals. Our newsletter puts your products and services directly in front of an audience that matters - hundreds of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases. Space Fills Up Fast - Reserve Today Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing sponsorship@bytebytego.com. |

#6164 The House of Tesla Genres/Tags: Logic, Puzzle, First-person, 3D Company: Blue Brain Games Languages: ENG/MULTI8 Original Size: 6.8 GB Repack Size: 4.1 GB Download Mirrors (Direct Links) .dlinks {margin: 0.5em 0 !important; font-size… Read on blog or Reader FitGirl Repacks Read on blog or Reader The House of Tesla By FitGirl on 26/09/2025 #6164 The House of Tesla Genres/Tags: Logic, Puzzle, First-person,...

Comments

Post a Comment