Disclaimer: The details in this post have been derived from the official documentation shared online by the Anthropic Engineering Team. All credit for the technical details goes to the Anthropic Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Open-ended research tasks are difficult to handle because they rarely follow a predictable path. Each discovery can shift the direction of inquiry, making it impossible to rely on a fixed pipeline. This is where multi-agent systems become important. By running several agents in parallel, multi-agent systems allow breadth-first exploration, compress large search spaces into manageable insights, and reduce the risk of missing key information.

Anthropic’s engineering team also found that this approach delivers major performance gains.
In internal evaluations, a system with Claude Opus 4 as the lead agent and Claude Sonnet 4 as supporting subagents outperformed a single-agent setup by more than 90 percent. The improvement was strongly linked to token usage and the ability to spread reasoning across multiple independent context windows, with subagents enabling the kind of scaling that a single agent cannot achieve. However, the benefits also come with costs:

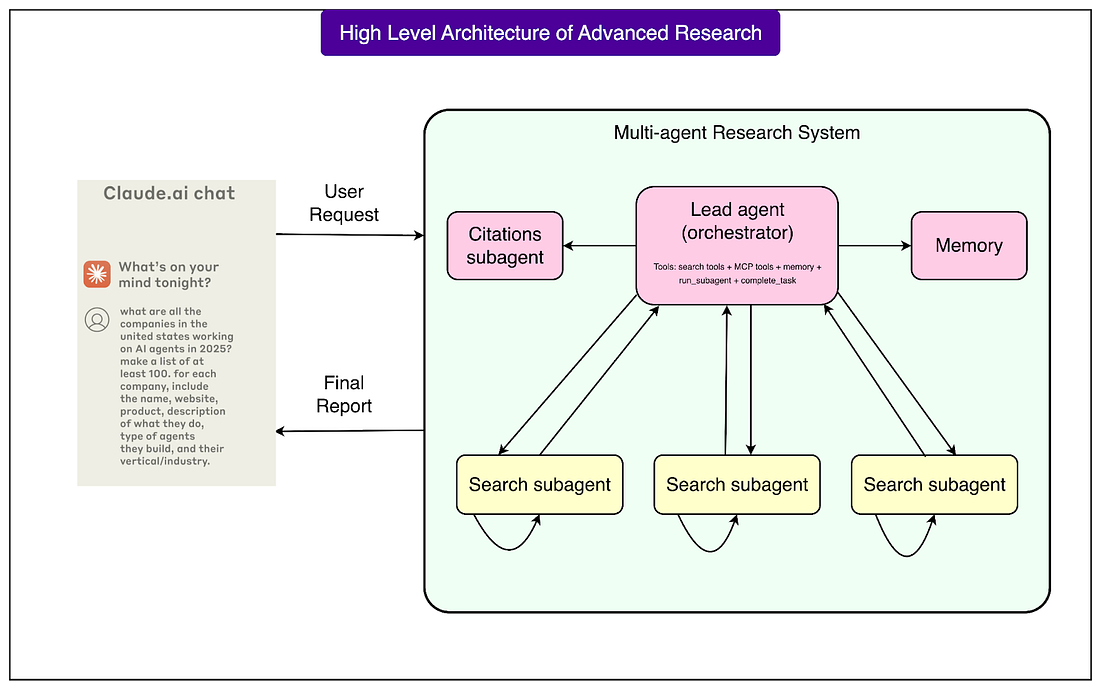

Despite these trade-offs, multi-agent systems are proving to be a powerful way to tackle complex, breadth-heavy research challenges. In this article, we will look at the architecture of the multi-agent research system that Anthropic built.

The Architecture of the Research System

The research system is built on an orchestrator-worker pattern, a common design in computing where one central unit directs the process and supporting units carry out specific tasks. In this case, the orchestrator is the Lead Researcher agent, while the supporting units are subagents that handle individual parts of the job. Here are the details:

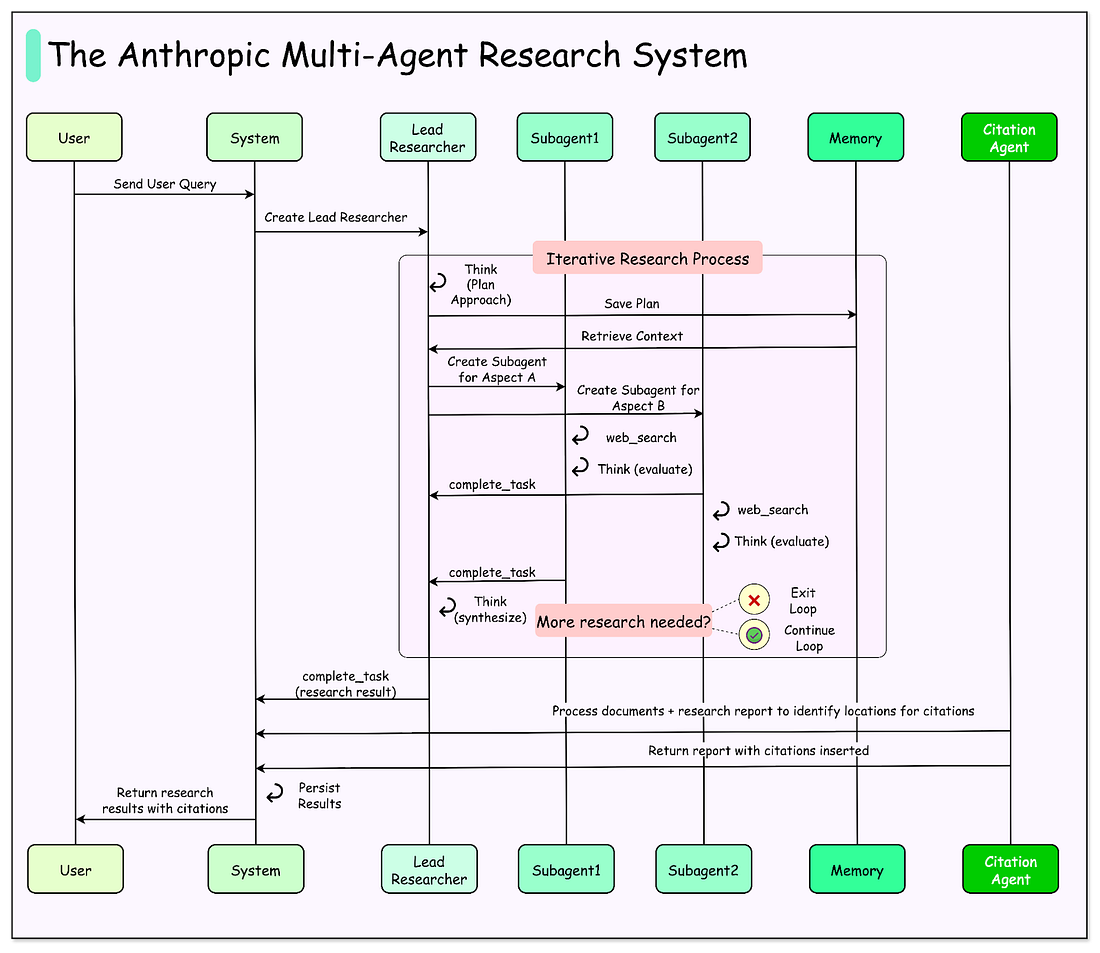

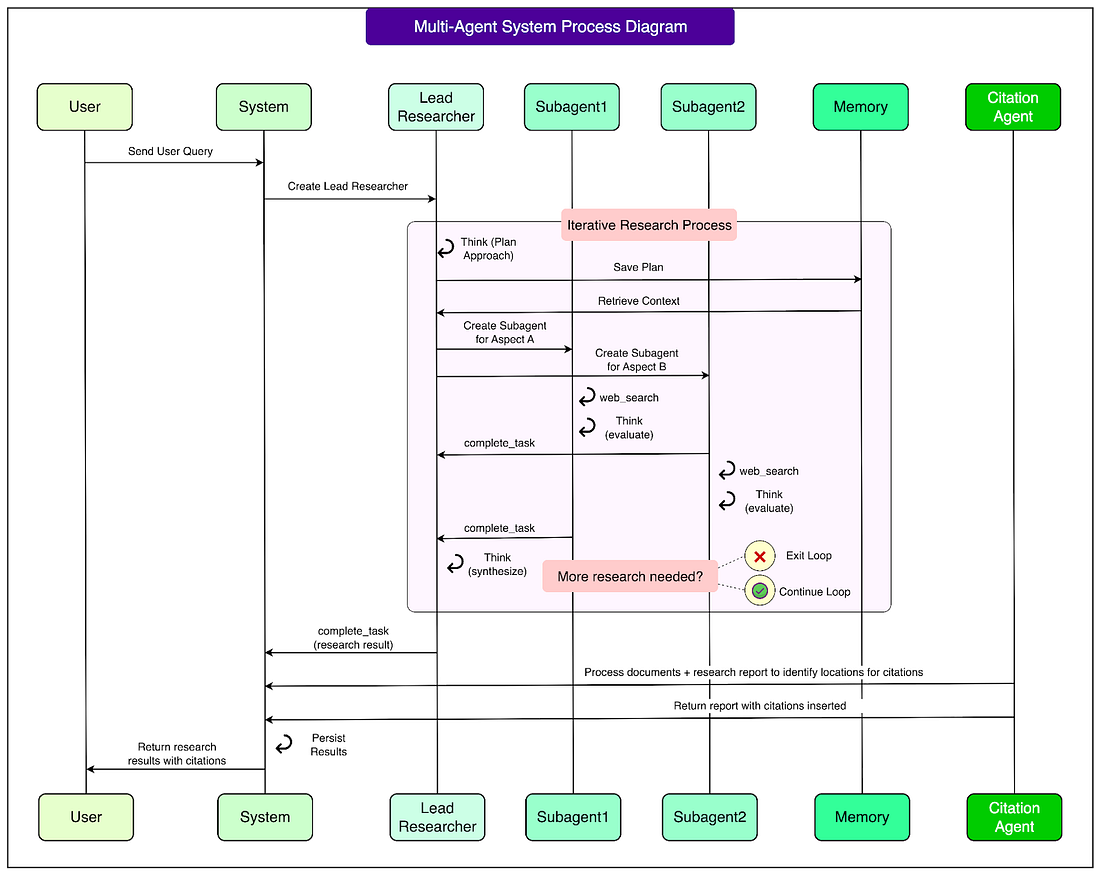
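As a rough illustration of the orchestrator-worker pattern described above, here is a minimal Python sketch. It is not Anthropic's implementation: `plan_subtasks`, `run_subagent`, and `Finding` are hypothetical names, and the workers return canned strings instead of calling a model or search tools. The point is only the shape: one lead function decomposes the query, then runs the workers concurrently and collects their results.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Finding:
    subtask: str
    summary: str


def plan_subtasks(query: str) -> list[str]:
    """Hypothetical planner: a real lead agent would ask a model to decompose the query."""
    return [
        f"{query}: background",
        f"{query}: recent developments",
        f"{query}: open problems",
    ]


async def run_subagent(subtask: str) -> Finding:
    """Hypothetical worker: a real subagent would call a model and search tools."""
    await asyncio.sleep(0)  # stand-in for model and tool latency
    return Finding(subtask=subtask, summary=f"notes on {subtask}")


async def lead_researcher(query: str) -> list[Finding]:
    subtasks = plan_subtasks(query)
    # Workers run concurrently; gather returns results in the order of the subtasks.
    return await asyncio.gather(*(run_subagent(s) for s in subtasks))


findings = asyncio.run(lead_researcher("solid-state batteries"))
for finding in findings:
    print(finding.subtask, "->", finding.summary)
```

Using `asyncio.gather` keeps each worker independent, which loosely mirrors the idea of each subagent working in its own context before the lead agent combines the findings.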

See the diagram below that shows the high-level architecture of these components:

This design differs from traditional Retrieval-Augmented Generation (RAG) systems. In standard RAG, the model retrieves a fixed set of documents that look most similar to the query and then generates an answer from them. The limitation is that retrieval happens only once, in a static way. The multi-agent system operates dynamically: it performs multiple rounds of searching, adapts based on the findings, and explores deeper leads as needed. In other words, it learns and adjusts during the research process rather than relying on a single snapshot of data.

The complete workflow looks like this:

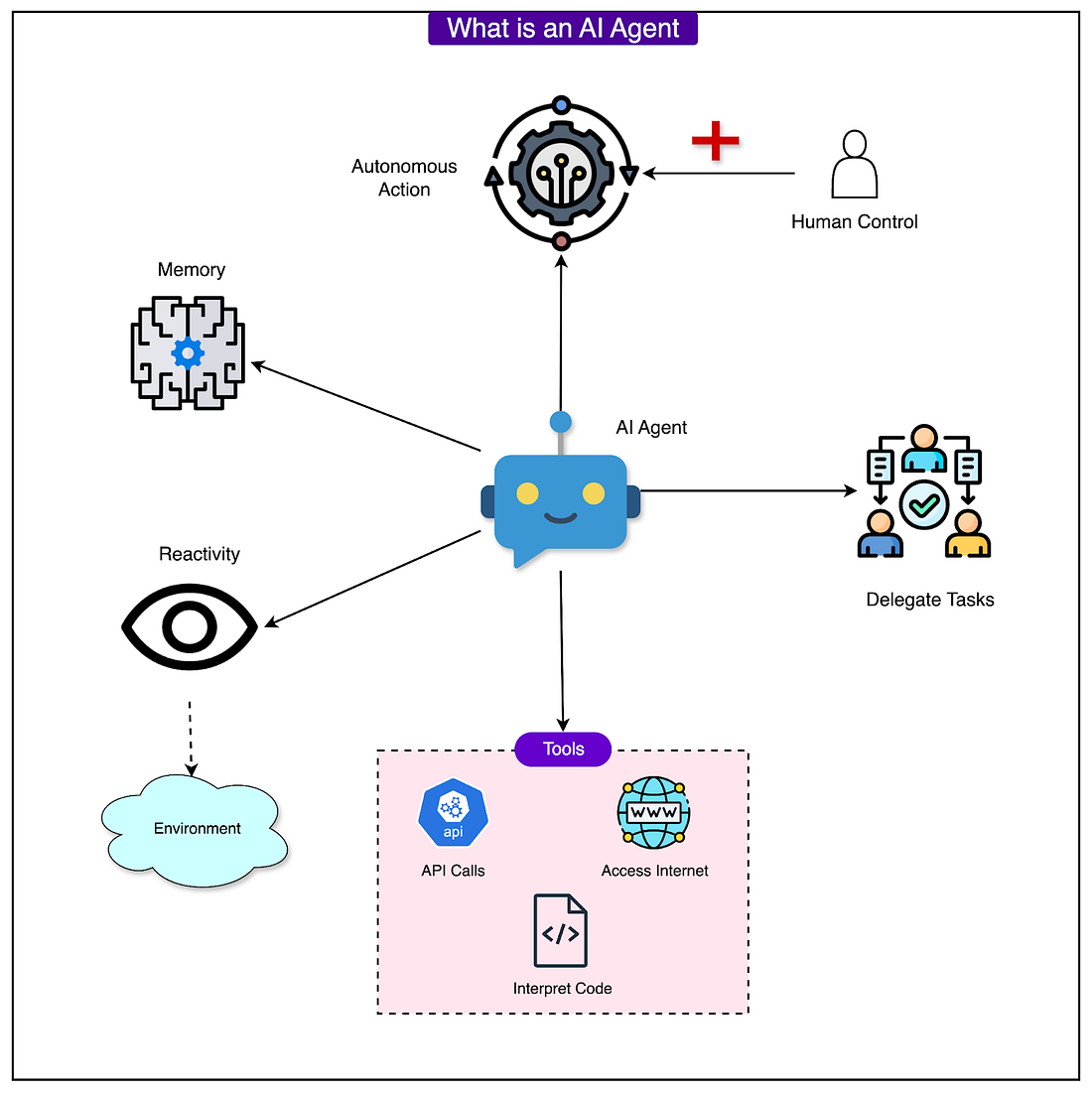
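To make the contrast with single-shot retrieval concrete, here is a small Python sketch of a multi-round search loop. Everything in it is an assumption made for illustration: the `search` tool is an in-memory dictionary lookup, and results that start with `see: ` stand in for leads that a real agent would discover and decide to follow. It is not how Anthropic's system is implemented.

```python
def search(query: str, corpus: dict[str, list[str]]) -> list[str]:
    """Hypothetical search tool backed by an in-memory dict instead of a real index."""
    return corpus.get(query, [])


def follow_up_queries(results: list[str]) -> list[str]:
    """Hypothetical lead extraction: treat 'see: X' entries as new queries to run."""
    return [r.removeprefix("see: ") for r in results if r.startswith("see: ")]


def iterative_research(query: str, corpus: dict[str, list[str]], max_rounds: int = 3) -> list[str]:
    frontier, seen, facts = [query], set(), []
    for _ in range(max_rounds):
        next_frontier = []
        for q in frontier:
            if q in seen:
                continue  # never repeat a query
            seen.add(q)
            results = search(q, corpus)
            facts += [r for r in results if not r.startswith("see: ")]
            next_frontier += follow_up_queries(results)
        if not next_frontier:
            break  # no new leads, so stop early
        frontier = next_frontier
    return facts


# Toy corpus: the second fact is only reachable by following a lead.
corpus = {
    "topic": ["fact A", "see: subtopic"],
    "subtopic": ["fact B"],
}
single_shot = [r for r in search("topic", corpus) if not r.startswith("see: ")]
multi_round = iterative_research("topic", corpus)
print(single_shot)  # ['fact A']
print(multi_round)  # ['fact A', 'fact B']
```

The single lookup misses `fact B` because it never follows the lead, while the loop picks it up in the second round.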

See the diagram below for more details:

This layered system allows for flexibility, depth, and accountability. The Lead Researcher ensures direction and consistency, subagents provide parallel exploration and scalability, and the Citation Agent enforces accuracy by tying results back to sources. Together, they create a system that is both more powerful and more reliable than single-agent or static retrieval approaches.

Prompt Engineering Principles

Designing good prompts turned out to be the single most important way to guide how the agents behaved. Since each agent is controlled by its prompt, small changes in phrasing could make the difference between efficient research and wasted effort. Through trial and error, Anthropic identified several principles that made the system work better.

1 - Think like your agents

To improve prompts, the engineering team built simulations where agents ran step by step using the same tools and instructions they would in production. Watching them revealed common mistakes. Some agents kept searching even after finding enough results, others repeated the same queries, and some chose the wrong tools. By mentally modeling how the agents interpret prompts, engineers could predict these failure modes and adjust the wording to steer agents toward better behavior.

See the diagram below to understand the concept of an agent on a high level:

2 - Teach delegation

The Lead Researcher is responsible for breaking down a query into smaller tasks and passing them to subagents. For this to work, the instructions must be very clear: each subagent needs a concrete objective, boundaries for the task, the right output format, and guidance on which tools to use. Without this level of detail, subagents either duplicated each other’s work or left gaps. For example, one subagent looked into the 2021 semiconductor shortage while two others repeated nearly identical searches on 2025 supply chains. Proper delegation avoids wasted effort.
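One way to picture the four ingredients of a good delegation (objective, boundaries, output format, tool guidance) is as a small structured brief that refuses to render if any part is missing. The sketch below is an assumption about how this could be done, not Anthropic's actual prompt format; `SubagentBrief` and the tool name `web_search` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubagentBrief:
    objective: str          # the one question this subagent must answer
    boundaries: str         # what is explicitly out of scope
    output_format: str      # the shape the lead agent expects back
    tools: tuple[str, ...]  # tools the subagent should prefer

    def to_prompt(self) -> str:
        # Reject vague briefs: every field must be filled in.
        missing = [name for name, value in vars(self).items() if not value]
        if missing:
            raise ValueError(f"brief is missing: {', '.join(missing)}")
        return "\n".join([
            f"Objective: {self.objective}",
            f"Boundaries: {self.boundaries}",
            f"Output format: {self.output_format}",
            f"Tools: {', '.join(self.tools)}",
        ])


brief = SubagentBrief(
    objective="Summarize causes of the 2021 semiconductor shortage",
    boundaries="Ignore events after 2021; another subagent covers 2025 supply chains",
    output_format="Five bullet points, each with a source URL",
    tools=("web_search",),  # hypothetical tool name
)
print(brief.to_prompt())
```

Stating the boundary explicitly ("another subagent covers 2025 supply chains") is what keeps two workers from running near-identical searches.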
3 - Scale effort to query complexity

Agents often struggle to judge how much effort a task deserves. To prevent over-investment in simple problems, scaling rules were written into prompts. For instance:

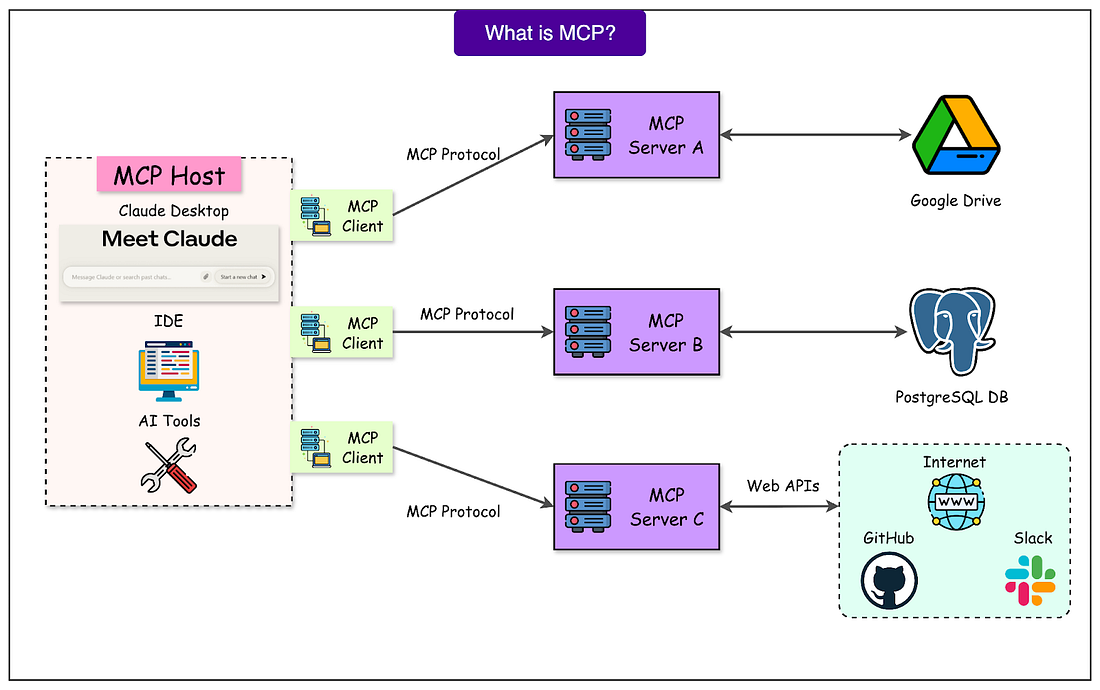
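A scaling rule of this kind can be thought of as a small lookup table that is rendered into the lead agent's prompt. The Python sketch below shows the idea; the tier names and every number in it are illustrative assumptions, not the rules Anthropic actually used, and `EffortBudget` and `render_scaling_rules` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EffortBudget:
    subagents: int
    tool_calls_per_agent: int


# Illustrative tiers only; the numbers are assumptions, not Anthropic's actual rules.
BUDGETS = {
    "simple": EffortBudget(subagents=1, tool_calls_per_agent=5),
    "comparison": EffortBudget(subagents=3, tool_calls_per_agent=10),
    "complex": EffortBudget(subagents=10, tool_calls_per_agent=15),
}


def render_scaling_rules(budgets: dict[str, EffortBudget]) -> str:
    """Turn the budget table into text that could be embedded in a lead-agent prompt."""
    lines = ["Match effort to query complexity:"]
    for tier, budget in budgets.items():
        lines.append(
            f"- {tier}: up to {budget.subagents} subagent(s), "
            f"about {budget.tool_calls_per_agent} tool calls each"
        )
    return "\n".join(lines)


rules_text = render_scaling_rules(BUDGETS)
print(rules_text)
```

Keeping the tiers in data rather than in free-form prose makes it easier to adjust the budgets and regenerate the prompt text consistently.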

These built-in guidelines helped the Lead Researcher allocate resources more effectively.

4 - Tool design matters

The way agents understand tools is as important as how humans interact with software interfaces. A poorly described tool can send an agent down the wrong path entirely. For example, if a task requires Slack data but the agent only searches the web, the task will fail. With MCP servers that give the model access to external tools, this problem can be compounded since agents encounter unseen tools with varying quality.

See the diagram below that shows the concept of MCP or Model Context Protocol.

To solve this, the team gave agents heuristics such as:

Each tool was carefully described with a distinct purpose so that agents could make the right choice.

5 - Let agents improve themselves

Claude 4 models proved capable of acting as their own prompt engineers. Given failing scenarios, they could analyze why things went wrong and suggest improvements. Anthropic even created a tool-testing agent that repeatedly tried using a flawed tool, then rewrote its description to avoid mistakes. This process cut task completion times by about 40 percent, because later agents could avoid the same pitfalls.

6 - Start wide, then narrow down

Agents often defaulted to very specific search queries, which returned few or irrelevant results. To fix this, prompts encouraged them to begin with broad queries, survey the landscape, and then narrow their focus as they learned more. This mirrors how expert human researchers work.

7 - Guide the thinking process

Anthropic used extended thinking and interleaved thinking as controllable scratchpads. Extended thinking allows the Lead Researcher to write out its reasoning before acting, such as planning which tools to use or how many subagents to create. Subagents also plan their steps and then, after receiving tool outputs, use interleaved thinking to evaluate results, spot gaps, and refine their next queries. This structured reasoning improved accuracy and efficiency.

8 - Use parallelization

Early systems ran searches one after another, which was slow. By redesigning prompts to encourage parallelization, the team achieved dramatic speedups. The Lead Researcher now spawns several subagents at once, and each subagent can use multiple tools in parallel. This reduced research times by as much as 90 percent for complex queries, making it possible to gather broad information in minutes instead of hours.

Evaluation Methods

Evaluating multi-agent systems is difficult because they rarely follow the same steps to reach an answer.
Anthropic used a mix of approaches to judge outcomes rather than strict processes.
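To illustrate what judging outcomes rather than processes can mean in practice, here is a deliberately simple Python sketch. It scores only the final answer and ignores the path taken to reach it. The substring check is a crude stand-in chosen for this example, and `RunResult` and `grade_outcome` are hypothetical names; the text above does not specify Anthropic's actual grading method.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    answer: str
    steps: list[str]  # the path taken; deliberately ignored by the grader


def grade_outcome(result: RunResult, required_facts: list[str]) -> float:
    """Score = fraction of required facts present in the final answer (case-insensitive)."""
    if not required_facts:
        return 1.0
    answer = result.answer.lower()
    hits = sum(fact.lower() in answer for fact in required_facts)
    return hits / len(required_facts)


# Two runs that took different paths but reached the same outcome.
run_a = RunResult("Paris is the capital of France.", ["search", "read", "answer"])
run_b = RunResult("The capital of France is Paris.", ["search", "search", "verify", "answer"])
required = ["Paris", "France"]

score_a = grade_outcome(run_a, required)
score_b = grade_outcome(run_b, required)
print(score_a, score_b)  # 1.0 1.0
```

Both runs receive the same score even though their step lists differ, which is the property an outcome-based check is meant to have.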

Production Engineering Challenges

Running multi-agent systems in production introduces reliability issues that go beyond traditional software.

Conclusion

Building multi-agent systems is far more challenging than building single-agent prototypes. Small bugs or errors can ripple through long-running processes, leading to unpredictable outcomes. Reliable performance requires proper prompt design, durable recovery mechanisms, detailed evaluations, and cautious deployment practices.

Despite the complexity, the benefits are significant. Multi-agent research systems have shown they can uncover connections, scale reasoning across vast amounts of information, and save users days of work on complex tasks. They are best suited for problems that demand breadth, parallel exploration, and reliable sourcing. With the right engineering discipline, these systems can operate at scale and open new possibilities for how AI assists with open-ended research.

References:
